Echoes Beneath Ice

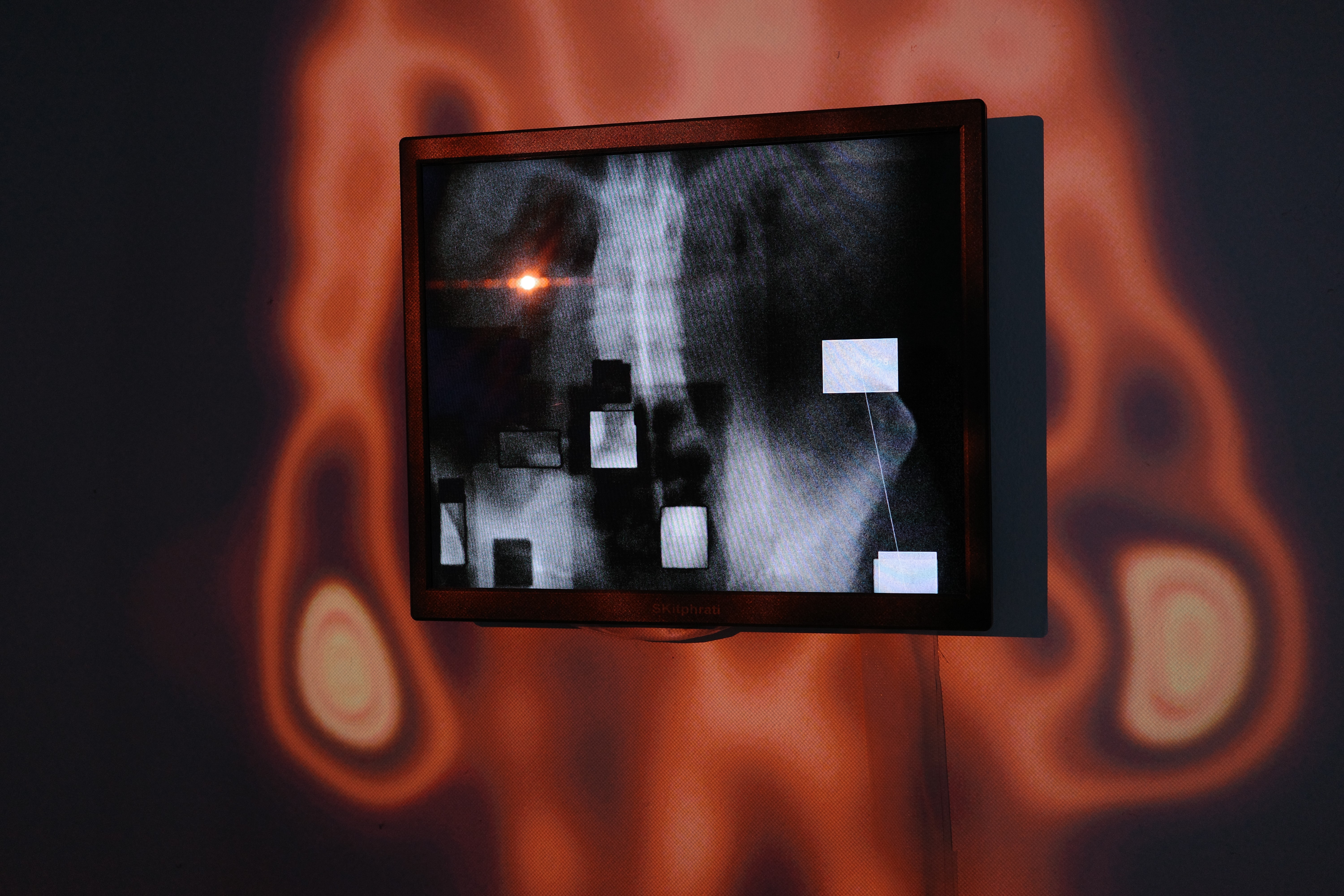

Echoes Beneath Ice is a computational art project that transforms microscopic ice core images into a generative audiovisual experience. By training a StyleGAN model on the visual patterns of air bubbles and cracks, and translating brightness structures into sound, the project reconstructs the hidden rhythms embedded in ancient climates.

Through mouse interaction, viewers take part in reweaving time, space, and memory: listening to the pulse of ancient air, the density of cracks, and the imagined breath of past climates. In this co-performance between human and machine, ice cores are reimagined as active agents, offering not a static record of the past but a living, resonant network shaped by memory, perception, and temporal flow.

So, what are we truly hearing—the rhythm of time, or the imagination of an algorithm?

Ice core slices are temporal containers of Earth’s climate. Each layer preserves microbubbles of ancient air alongside traces of dust and signals of past temperature. These are not silent relics but non-human actants: material witnesses to planetary change.

If ice layers could be not only seen but heard, what stories might they tell?

Echoes Beneath Ice is a generative audiovisual project that explores how contemporary AI co-performs with non-human matter to construct a “listenable history.” Using microscopic images of ice core slices, the project trains a StyleGAN model to generate interpolated videos. The frames of these videos are then scanned, and the structure and density of the bubbles are translated into rhythm and frequency.
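The project does not describe its scanning procedure in detail. The sketch below shows one way such a brightness-to-sound mapping could work, assuming each video frame is a grayscale image with values in [0, 1] that is scanned left to right, one column at a time. The names `scan_frame` and `Event`, the 110 to 880 Hz pitch range, and the darkness threshold of 0.3 are all hypothetical. Mean column brightness sets the pitch, and the count of dark pixels (a stand-in for bubbles and cracks) sets how many rhythmic pulses that column contributes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    """One sound event derived from a single frame column (hypothetical)."""
    time_s: float   # onset time of the event within the frame's duration
    freq_hz: float  # pitch derived from the column's mean brightness
    pulses: int     # number of dark pixels, used as rhythmic density


def scan_frame(
    frame: List[List[float]],
    duration_s: float = 4.0,
    f_min: float = 110.0,
    f_max: float = 880.0,
    dark_threshold: float = 0.3,
) -> List[Event]:
    """Scan a grayscale frame (rows of values in [0, 1]) from left to right."""
    height = len(frame)
    width = len(frame[0])
    events = []
    for x in range(width):
        column = [frame[y][x] for y in range(height)]
        mean = sum(column) / height
        # Exponential map: brighter columns give higher pitch, and equal
        # brightness steps give equal frequency ratios (musical intervals).
        freq = f_min * (f_max / f_min) ** mean
        # Dark pixels stand in for bubbles and cracks; more of them means
        # a denser rhythm for this column.
        pulses = sum(1 for v in column if v < dark_threshold)
        events.append(Event(x * duration_s / width, freq, pulses))
    return events


# Usage: a tiny 2x3 "frame" with a bright, a dark, and a mid-gray column.
frame = [
    [0.9, 0.1, 0.5],
    [0.8, 0.2, 0.5],
]
for event in scan_frame(frame):
    print(event)
```

The exponential mapping is a design choice, not a requirement: it makes equal brightness differences sound like equal musical intervals, whereas a linear map would compress the perceived pitch range at the bright end.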
